The spread of misinformation online has been recognized as a growing social problem. In responding to the issue, social media platforms have (i) promoted the services of third-party fact-checkers; (ii) removed producers of misinformation and downgraded false content; and (iii) provided contextual information for flagged content, empowering users to determine the veracity of information for themselves. In a recent staff report, we develop a flexible model of misinformation to assess the efficacy of these types of interventions. Our analysis focuses on how well these measures incentivize users to verify the information they encounter online.

A “Supply and Demand” Framework

Our model features two types of actors: users who view news and producers who generate it. Users benefit from sharing true information but suffer losses from sharing misinformation; this modeling choice intentionally reduces the chances that false content will be transmitted.

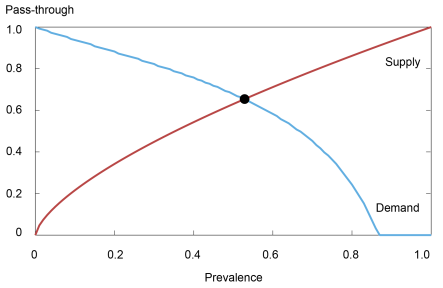

When encountering a news item, a user can choose to determine its veracity at a cost (of time and effort, for example) before deciding whether to share it. The decision to pursue verification thus involves weighing the cost of achieving certainty (by consulting a fact-check article, for example) against the risk of sharing misinformation. That risk depends on the prevalence of misinformation on a given platform, which is in turn determined by supply and demand forces. Specifically, producers of fake content benefit when users share such information without first bothering to verify it. As the fraction of these users increases, so does the pass-through of misinformation, thereby incentivizing the production (and hence prevalence) of false content, resulting in an upward-sloping “supply curve” of misinformation. Conversely, users are less likely to pass on unverified news when the risk of encountering fake content is higher. As the prevalence of misinformation increases, fewer users engage in such unverified sharing, resulting in a downward-sloping “misinformation pass-through curve.” Much like a standard supply-demand framework, equilibrium misinformation prevalence and pass-through emerge at the point of intersection of the two curves, as visualized in the chart below.

Equilibrium Prevalence and Pass-through of Misinformation on a Social Media Platform
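
The intersection logic can be made concrete with a minimal sketch. The two functional forms below are illustrative assumptions, not the curves from the staff report; the function names are hypothetical as well. The code simply searches for the prevalence level at which producers' response to the implied pass-through reproduces that same prevalence.

```python
def supply_prevalence(pass_through: float) -> float:
    """Hypothetical upward-sloping supply curve: more unverified sharing
    makes production more profitable, so prevalence rises."""
    return 0.8 * pass_through


def pass_through_rate(prevalence: float) -> float:
    """Hypothetical downward-sloping pass-through curve: higher prevalence
    makes unverified sharing riskier, so fewer users do it."""
    return 1.0 - prevalence


def find_equilibrium(tol: float = 1e-12) -> tuple[float, float]:
    """Bisect on prevalence until supply and pass-through are consistent."""

    def excess_supply(prevalence: float) -> float:
        return supply_prevalence(pass_through_rate(prevalence)) - prevalence

    low, high = 0.0, 1.0  # excess supply is positive at 0 and negative at 1
    while high - low > tol:
        mid = (low + high) / 2
        if excess_supply(mid) > 0:
            low = mid
        else:
            high = mid
    prevalence = (low + high) / 2
    return prevalence, pass_through_rate(prevalence)


prevalence, pass_through = find_equilibrium()
print(f"prevalence = {prevalence:.3f}, pass-through = {pass_through:.3f}")
```

With these assumed linear curves, the intersection lies at a prevalence of 4/9 and a pass-through of 5/9. Any other pair of one upward-sloping and one downward-sloping curve could be substituted, provided the bracket endpoints still straddle the crossing.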

Examining Users’ Incentives

Our framework enables us to assess the efforts that platforms have undertaken to counter misinformation. In particular, we focus on how these measures might affect users’ incentives to verify the news they see online.

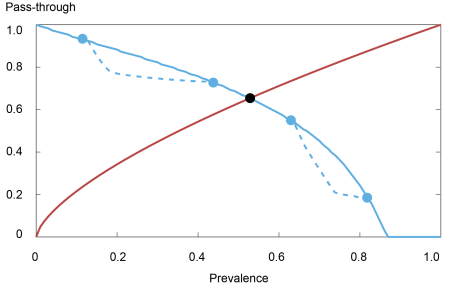

1. The extent of unverified sharing can be insensitive to reductions in verification costs. If it became cheaper for users to determine the veracity of any given news item, one would expect an inward shift in the misinformation pass-through curve, ensuring a drop in both prevalence and pass-through. As we show in our paper, however, for any decrease in verification costs, there are always levels of prevalence at which unverified sharing behavior is insensitive to such changes. The following chart shows an example of a misinformation pass-through curve that only shifts inward at two disconnected regions of prevalence, leaving the equilibrium unchanged.

The Misinformation Pass-through Curve Need Not Shift Inward Everywhere after a Reduction in Verification Costs

From that example, we can see that there is no inward shift for low levels of prevalence—this is natural, as the risk of sharing misinformation is low in those cases. What is interesting is that there is no shift at intermediate and high levels of prevalence either, where the risk of sharing fake content is not small. Indeed, as we show in the model, it is possible that around those levels, a reduction in verification costs has the “unintended” effect of inducing verification by users who were not originally sharing content, but not by those who were already sharing unverified information. Put differently, such a policy induces “entry” by users who originally found it too risky to share when verification was prohibitively costly for them—but since their sharing decisions are driven purely by the possibility of verifying news, the pass-through of misinformation is unchanged.

Importantly, our research finds that the location of such “insensitive” regions depends critically on the way in which the benefits and losses associated with misinformation vary across the population. An examination of such distributions is then a key empirical question for assessing whether fact-checking initiatives—which lower the costs borne by users when searching for information to validate news—can be effective at combating misinformation.
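
The “entry” effect described above can be illustrated with a toy example. The payoff structure here is an assumption made for illustration only: abstaining yields zero, unverified sharing yields the benefit when the item is true and the loss when it is false, and verifying costs a fixed amount and leads to sharing only true items. The two users and all numbers are hypothetical.

```python
def choose_action(prevalence, benefit, loss, cost):
    """Return the payoff-maximizing action under the assumed payoffs."""
    payoffs = {
        "abstain": 0.0,
        "share_unverified": (1 - prevalence) * benefit - prevalence * loss,
        "verify": (1 - prevalence) * benefit - cost,
    }
    return max(payoffs, key=payoffs.get)  # ties go to the first-listed action


def pass_through(users, prevalence, cost):
    """Fraction of users who share without verifying."""
    actions = [choose_action(prevalence, b, l, cost) for b, l in users]
    return actions.count("share_unverified") / len(users)


# Hypothetical population of (benefit, loss) pairs:
# a low-loss user and a high-loss user.
users = [(1.0, 0.5), (1.0, 4.0)]
prevalence = 0.5

for cost in (1.0, 0.3):  # verification cost before and after the reduction
    actions = [choose_action(prevalence, b, l, cost) for b, l in users]
    print(f"cost={cost}: actions={actions}, "
          f"pass-through={pass_through(users, prevalence, cost)}")
```

In this sketch the cheaper verification moves the high-loss user from abstaining to verifying, while the low-loss user keeps sharing unverified content, so the pass-through rate at this prevalence level stays the same. How wide such insensitive regions are depends on how the assumed benefits and losses are spread across users.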

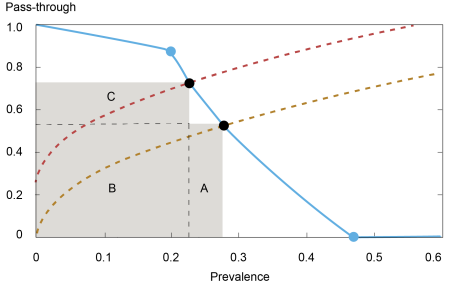

2. Supply interventions can increase the diffusion of misinformation. Various policies currently in place attempt to reduce the profitability of peddling false information, thereby reducing its supply (and prevalence). However, the pass-through of fake content increases because users’ verification incentives are weakened in the process, as news items become more likely to be truthful. The diffusion rate of misinformation—prevalence times pass-through—captures the chance that misinformation is shared among users. The next chart depicts a misinformation pass-through curve that exhibits verification by users (notice a steeper decline—due to an inward shift—at prevalence levels from 0.2 onward), but where the extent of diffusion (areas B plus C) increases after the supply of misinformation is curtailed: specifically, the decrease in diffusion due to lower prevalence (area A) is smaller than its increase due to a higher pass-through rate (area C).

A Reduction in the Supply of Misinformation Leads to Greater Diffusion when Verification Is at Play

Our analysis identifies conditions under which the pass-through curve is sufficiently “responsive” to such supply changes that the diffusion rate increases after the intervention. It also examines when a reduction in verification costs makes the pass-through curve more responsive, thereby providing conditions under which the two policies, applied jointly, can compound each other’s adverse effects.
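
The area comparison can be checked numerically. The sketch below assumes a hypothetical kinked pass-through curve that declines more steeply from a prevalence of 0.2 onward, and hypothetical prevalence levels before and after the supply intervention; none of these numbers come from the staff report. It also assumes that areas A, B, and C are the rectangles defined in the code comments.

```python
def pass_through_rate(prevalence):
    """Hypothetical kinked curve: gentle decline up to 0.2, steeper after."""
    if prevalence <= 0.2:
        return 1.0 - 0.5 * prevalence
    return max(0.0, 0.9 - 1.5 * (prevalence - 0.2))


def diffusion(prevalence):
    """Diffusion rate: prevalence times pass-through."""
    return prevalence * pass_through_rate(prevalence)


p_before, p_after = 0.5, 0.4  # assumed prevalence before and after
q_before = pass_through_rate(p_before)
q_after = pass_through_rate(p_after)

area_a = (p_before - p_after) * q_before  # diffusion lost to lower prevalence
area_b = p_after * q_before               # diffusion common to both outcomes
area_c = p_after * (q_after - q_before)   # diffusion gained from higher pass-through

print(f"diffusion before = {diffusion(p_before):.3f} (A + B)")
print(f"diffusion after  = {diffusion(p_after):.3f} (B + C)")
print(f"area A = {area_a:.3f}, area C = {area_c:.3f}")
```

Under these assumptions area C exceeds area A, so diffusion rises even though prevalence falls. A flatter curve beyond the kink would reverse the comparison, which is why the responsiveness of the pass-through curve matters.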

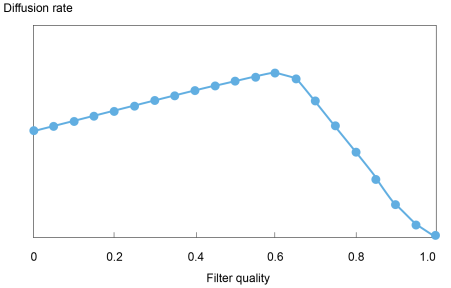

3. Detection algorithms that remove news for users can backfire. We also evaluate the efficacy of internal filters that assess the veracity of news articles, removing those that are deemed false before they reach users. In this context, we show that the introduction of imperfect filters can actually increase the prevalence and diffusion of misinformation despite the extra layer of protection provided by these algorithms. Consider the case of a filter that makes “false negative” errors (incorrectly deciding that a false news story is true): after a news article has passed through the filter, a user becomes more confident of its veracity and less inclined to verify its content, thereby increasing unverified sharing. This effect can outweigh the reduction in misinformation production that stems from producers correctly perceiving this extra layer of protection as limiting the pass-through of false content. See the next chart, where the diffusion of fake news starts falling only after the filter in place is sufficiently effective.

The Diffusion of Misinformation Can Increase when the Quality of the Filter—the Probability of Detecting Fake News—Improves
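
The confidence effect behind this result follows from Bayes’ rule. The sketch below assumes, for illustration, a filter that removes a fake item with a given detection probability and never removes a true item; the function name and the numbers are hypothetical. It computes the chance that an item is fake given that it has reached the user.

```python
def posterior_fake(prevalence, detection_prob):
    """Probability that an item is fake given that it passed the filter.

    Assumes the filter only makes false-negative errors: fake items slip
    through with probability 1 - detection_prob, and true items always pass.
    Requires prevalence < 1 so that some items pass the filter.
    """
    passed_fake = prevalence * (1 - detection_prob)
    passed_true = 1 - prevalence
    return passed_fake / (passed_fake + passed_true)


prevalence = 0.3  # hypothetical share of fake items before filtering
for detection_prob in (0.0, 0.25, 0.5, 0.75, 1.0):
    risk = posterior_fake(prevalence, detection_prob)
    print(f"filter quality {detection_prob:.2f}: "
          f"chance a delivered item is fake = {risk:.3f}")
```

As the filter improves, the perceived risk of a delivered item falls below the underlying prevalence, which weakens the case for costly verification. Whether total diffusion rises or falls then depends on how producers respond, which this sketch does not model.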

Finally, while our baseline model is “competitive” in that it examines a market with many small misinformation producers, we also study the case of a single producer of fake content. In such a market, we show that the traditional exercise of monopoly power, reducing “trade” (here, prevalence) to obtain a larger pass-through rate, is hindered by the fact that the prevalence of misinformation is generally not directly observed by users. Thus, disclosing prevalence levels to users could favor a monopolistic misinformation producer by enabling her to move along the pass-through curve to target a higher pass-through rate.

Overall, our research highlights that the joint analysis of users’ and producers’ incentives is essential for the design of policy interventions in social media. In the process, we have identified some types of data that need to be gathered in order to assess the efficacy of policy interventions: how users’ benefits and losses from sharing news are distributed across populations of interest; how sensitive verification incentives are to changes in prevalence; and how substitutable internal filters and individuals’ verification choices are across various levels of filter quality.

Gonzalo Cisternas is a financial research advisor in Non-Bank Financial Institution Studies in the Federal Reserve Bank of New York’s Research and Statistics Group.

Jorge Vásquez is an assistant professor of economics at Smith College.

How to cite this post:

Gonzalo Cisternas and Jorge Vásquez, “Breaking Down the Market for Misinformation,” Federal Reserve Bank of New York Liberty Street Economics, November 28, 2022, https://libertystreeteconomics.newyorkfed.org/2022/11/breaking-down-the-market-for-misinformation/

Disclaimer

The views expressed in this post are those of the author(s) and do not necessarily reflect the position of the Federal Reserve Bank of New York or the Federal Reserve System. Any errors or omissions are the responsibility of the author(s).
